Reinforcement Learning from Human Feedback (RLHF): a detailed guide

Reinforcement Learning from Human Feedback (RLHF) is a Machine Learning (ML) technique that uses human feedback to ensure that large language models (LLMs) or other AI systems offer responses similar to those formulated by humans.

RLHF is playing a revolutionary role in the transformation of artificial intelligence. It is considered the industry-standard technique for making LLMs more efficient and accurate in the way they mimic human responses. The best example is ChatGPT. When the OpenAI team was developing ChatGPT, they applied RLHF to the GPT model to make ChatGPT able to respond as it does today (i.e. in a quasi-natural way).

Essentially, RLHF includes human input and feedback in the reinforcement learning model. This helps improve the efficiency of AI applications and aligns them with human expectations.

Considering the importance and usefulness of RLHF for developing complex models, the AI research and development community has invested heavily in this concept. In this article, we've tried to explain its principle in accessible terms, covering everything you need to know about Reinforcement Learning from Human Feedback (RLHF), including its basic concept, operating principle, benefits and much more. If there's one thing to remember, it's that this process involves bringing moderators/evaluators into the AI development cycle. Innovatiana offers these AI evaluation services: don't hesitate to contact us!

What is Reinforcement Learning from Human Feedback (RLHF) in AI?

Reinforcement Learning from Human Feedback (RLHF) is a machine learning (ML) technique that uses human feedback to help ML models learn more effectively. Reinforcement learning (RL) techniques train software/models to make decisions that maximize rewards. This leads to more accurate results.

RLHF involves incorporating human feedback into the reward function, leading the ML model to perform tasks that correspond to human needs and goals. It gives the model the power to correctly interpret human instructions, even if they are not clearly described. In addition, it also helps the existing model to improve its ability to generate natural language.

Today, RLHF is widely used across generative artificial intelligence applications, particularly in large language models (LLMs).

The fundamental concept behind RLHF

To better understand RLHF, it is important to first understand reinforcement learning (RL) and the role of human feedback in it.

Reinforcement learning (RL) is an ML technique in which an agent/model interacts with its environment to learn how to make decisions. The agent performs an action, which moves the environment to a new state and returns a reward. The reward acts as feedback, and repeating this loop many times gradually improves the agent's decision-making. However, it is difficult to design an effective reward system. This is where human interaction and feedback come in.
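To make the action/reward loop concrete, here is a minimal sketch in Python. It is a deliberately simplified, stateless case (a "multi-armed bandit"), so there is no state transition. The function name `run_bandit`, the reward values and the epsilon-greedy strategy are assumptions chosen for illustration, not something taken from a specific RLHF system.

```python
import random

def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent learning which action yields the highest reward."""
    rng = random.Random(seed)
    n_actions = len(true_means)
    estimates = [0.0] * n_actions  # agent's current estimate of each action's value
    counts = [0] * n_actions       # how many times each action was tried

    for _ in range(steps):
        # Explore a random action with probability epsilon, otherwise exploit
        # the action that currently looks best.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])

        # The environment answers with a noisy reward (the "feedback").
        reward = rng.gauss(true_means[action], 1.0)

        # Incremental average: move the estimate toward the observed reward.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts

# Hypothetical environment with three actions of increasing average reward.
estimates, counts = run_bandit([0.2, 0.5, 0.9])
print("estimated values:", [round(e, 2) for e in estimates])
print("times each action was chosen:", counts)
```

In this toy case the reward comes from a formula. In RLHF, the difficulty is precisely that no simple formula captures what a "good" answer is, which is why the reward is learned from human judgments instead.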

Human feedback addresses the shortcomings of the reward system by involving a human reward signal through a human supervisor. In this way, the agent's decision-making becomes more efficient. Most RLHF systems involve both the collection of human feedback and automated reward signals for faster training.

RLHF and its role in large language models (LLMs)

For the general public, including specialists outside the AI research community, the term RLHF is mainly associated with large language models (LLMs). As mentioned earlier, RLHF is the industry-standard technique for making LLMs more efficient and accurate at mimicking human responses.

LLMs are designed to predict the next word/token in a sentence. For example, if you enter the beginning of a sentence into a GPT language model, it will complete it with plausible continuations (sometimes hallucinated or even biased, but that's another debate).
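As a rough illustration of "predicting the next token", the sketch below builds a tiny bigram model: it counts which word follows which in a small corpus and predicts the most frequent follower. Real LLMs use neural networks over far larger contexts; the corpus and the function names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for every token, which tokens follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy corpus.
corpus = [
    "the model predicts the next token",
    "the model completes the sentence",
    "the model predicts a word",
]
bigram = train_bigram(corpus)
print(predict_next(bigram, "model"))  # prints "predicts"
```

Such a model only continues text. Nothing in it rewards understanding a request, which is the limitation discussed next.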

However, humans don't just want the LLM to complete sentences. They want it to understand their complex requests and respond accordingly. For example, suppose you ask the LLM to "write a 500-word article on cybersecurity". In this case, it may interpret the instructions incorrectly and respond by telling you how to write an article on cybersecurity instead of writing the article for you. This happens mainly because predicting the next token does not, by itself, reward following instructions. This is exactly the gap that RLHF addresses for LLMs. Do you follow?

RLHF enhances the capabilities of LLMs by incorporating a reward system with human feedback. This teaches the model to consider human advice and respond in a way that best aligns with human expectations. In short, RLHF is the key to LLMs making human-like responses. It could also, in a way, explain why a model like ChatGPT is more attentive to courteously phrased questions (as in life, a "please" in a question is more likely to get a quality answer!).

Why is RLHF important?

The artificial intelligence (AI) industry is booming today with the emergence of a wide range of applications, including natural language processing (NLP), autonomous cars, stock market predictions and more. However, the main aim of almost all AI applications is to reproduce human responses or make human-like decisions.

RLHF plays a major role in training AI models/applications to respond in a more human-like way. In the RLHF development process, the model first gives a response that somewhat resembles the one that might be formulated by a human. Then, a human supervisor (such as our team of moderators at Innovatiana) gives direct feedback and a score to the response based on various aspects involving human values, such as mood, friendliness, feelings associated with a text or image, and so on. For example, a model may produce a translation that is technically correct but reads unnaturally. This is what human feedback can detect. In this way, the model continues to learn from human responses and improves its results to match human expectations.

If you use ChatGPT regularly, you'll no doubt have noticed that it offers options and sometimes asks you to choose the best answer. You've unknowingly taken part in its training process, as a supervisor!

The importance of RLHF is evident in the following aspects:

- Making AI more accurate: It improves model accuracy by incorporating an additional human feedback loop.

- Facilitating complex training parameters: RLHF helps in training models with complex parameters, such as guessing the feeling associated with a piece of music or text (sadness, joy, particular state of mind, etc.).

- Satisfying users: It makes models respond in a more human way, increasing user satisfaction.

In short, RLHF is a tool in the AI development cycle for optimizing the performance of ML models and making them capable of mimicking human responses and decision-making.

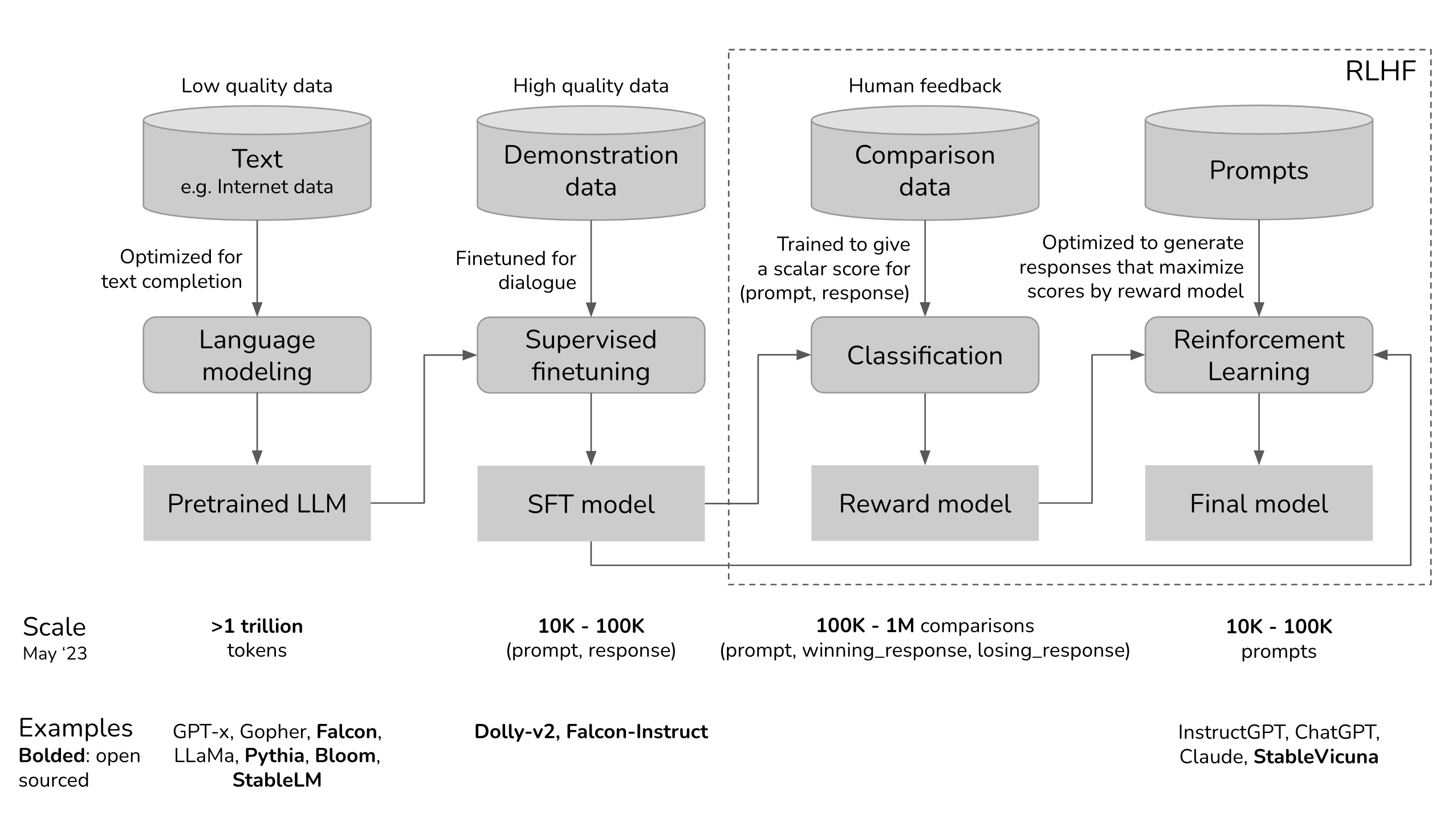

RLHF step-by-step training process

Reinforcement Learning from Human Feedback (RLHF) extends the self-supervised learning that large language models undergo as part of the training process. However, RLHF is not a self-sufficient learning model, as the use of human testers and trainers makes the whole process expensive. Consequently, most companies use RLHF to fine-tune a pre-trained model, i.e. to complement an automated AI development cycle with human intervention.

The step-by-step RLHF development/training process is as follows:

Step 1. Pre-training a language model

The first step is to pre-train the language model (such as BERT, GPT or LLaMA to name but a few) using a large quantity of textual data. This training process enables the language model to understand the different nuances of human language, such as semantics or syntax, and of course the language itself.

The main activities involved in this stage are as follows:

1. Choose a basic language model for the RLHF process. For example, OpenAI uses a lightweight version of GPT-3 for its RLHF model.

2. Gather raw data from the Internet and other sources and pre-process them to make them suitable for training.

3. Train the language model with data.

4. Evaluate the post-training language model using a different data set.

In order for the LM to acquire more knowledge about human preferences, humans are involved in generating responses to prompts or questions. These responses are also used to train the reward model.

Step 2. Training a reward model

A reward model is a language model that sends a ranking signal to the original language model. Basically, it serves as an alignment tool that integrates human preferences into the AI learning process. For example, an AI model generates two answers to the same question, so the reward model will indicate which answer is closer to human preferences.

The main activities involved in the formation of the reward model are as follows:

- Establish the reward model, which can be a modular system or an end-to-end language model.

- Train the reward model with a different dataset than the one used when pre-training the language model. The dataset is composed of prompt and reward pairs, i.e. each prompt is paired with an expected output and an associated reward that expresses how desirable that output is.

- Train the model using prompts and reward pairs to associate specific outputs with corresponding reward values.

- Integrate human feedback into reward model training to refine the model. For example, ChatGPT integrates human feedback by asking users to rate a response by clicking on the thumbs-up or thumbs-down button.
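The activities above can be sketched with a very small reward model. This example assumes a pairwise setup (for each prompt, a human marked one answer as preferred over another) and a Bradley-Terry-style loss, which is one common way to train reward models but is not necessarily the exact recipe described here. The features, data and function names are hypothetical, and a real reward model would be a neural network rather than a weighted sum.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def reward(weights, features):
    """Scalar reward: a simple weighted sum of the response's features."""
    return sum(w * f for w, f in zip(weights, features))

def train_reward_model(pairs, n_features, lr=0.5, epochs=200):
    """Fit weights so that preferred responses score higher than rejected ones.

    Minimizes -log(sigmoid(r_chosen - r_rejected)) by gradient descent.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # The loss gradient is -(1 - sigmoid(margin)) * (chosen - rejected),
            # so we step in the opposite direction.
            scale = 1.0 - sigmoid(margin)
            for i in range(n_features):
                weights[i] += lr * scale * (chosen[i] - rejected[i])
    return weights

# Hypothetical features per response: [answers_the_request, is_polite, verbosity]
pairs = [
    ([1.0, 1.0, 0.2], [0.0, 1.0, 0.2]),  # annotator preferred the on-topic answer
    ([1.0, 0.0, 0.5], [0.0, 0.0, 0.9]),  # on-topic and concise beats off-topic and long
    ([1.0, 1.0, 0.1], [1.0, 0.0, 0.1]),  # polite beats impolite
]
weights = train_reward_model(pairs, n_features=3)
for chosen, rejected in pairs:
    print(round(reward(weights, chosen), 2), ">", round(reward(weights, rejected), 2))
```

The design choice worth noting is that the model never sees an absolute score: it only learns from comparisons, which human annotators tend to give more consistently than numeric grades.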

Step 3. Fine-tuning the language model (LM) with reinforcement learning

The last and most critical step is fine-tuning the language model with reinforcement learning. This fine-tuning is essential to ensure that the language model provides reliable responses to user prompts. For this, AI specialists use reinforcement learning algorithms such as Proximal Policy Optimization (PPO), usually combined with a penalty based on the Kullback-Leibler (KL) divergence.

The main activities involved in refining LM are as follows:

1. The user's input is sent to the RL policy (the adjusted version of the LM). The RL policy generates the response. The RL policy's response and the LM's initial output are then evaluated by the reward model, which provides a scalar reward value based on the quality of the responses.

2. The above process continues in a feedback loop, so that the reward model continues to assign rewards to as many samples as possible. In this way, the RL policy will gradually begin to generate responses in a style close to that of humans.

3. KL divergence is a statistical measure of the difference between two probability distributions. In RLHF, it is typically used to compare the distribution of the current RL policy's responses with a reference distribution, usually that of the initial language model, so that the policy is penalized when it drifts too far from it.

4. Proximal Policy Optimization (PPO) is a reinforcement learning algorithm that efficiently optimizes policies in complex environments involving high-dimensional state/action spaces. It is very useful for refining the LM as it balances exploitation and exploration during training. Since RLHF agents have to learn from human feedback and trial-and-error exploration, this balance is vital. In this way, PPO helps to achieve robust and faster learning.
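The two ingredients named above, KL divergence and PPO, can be illustrated with a few lines of Python. This is a sketch on toy numbers, not a training loop. It assumes the reference distribution is the initial language model's, and the coefficient `beta`, the clipping range `clip_eps` and the function names are illustrative choices.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same tokens."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def kl_penalized_reward(reward_score, policy_probs, reference_probs, beta=0.1):
    """Reward model score minus a penalty for drifting from the reference model."""
    return reward_score - beta * kl_divergence(policy_probs, reference_probs)

def ppo_clipped_objective(ratio, advantage, clip_eps=0.2):
    """PPO's clipped surrogate objective for a single sample.

    `ratio` is new_policy_prob / old_policy_prob for the action taken.
    Clipping keeps a single update from changing the policy too much.
    """
    clipped = max(1 - clip_eps, min(ratio, 1 + clip_eps))
    return min(ratio * advantage, clipped * advantage)

# Toy next-token distributions over a 3-token vocabulary (hypothetical values).
reference = [0.5, 0.3, 0.2]  # initial language model
policy = [0.7, 0.2, 0.1]     # RL policy after some updates

print("KL(policy || reference):", kl_divergence(policy, reference))
print("penalized reward:", kl_penalized_reward(1.0, policy, reference))
# A ratio of 1.5 with a positive advantage is capped at 1.2 * advantage.
print("clipped objective:", ppo_clipped_objective(1.5, 1.0))
```

The KL penalty and the clipping play complementary roles: the first keeps the policy close to the original model over the whole training run, the second limits how far any single update can move it.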

That's all, and that's not bad at all! This is how a complete RLHF training process works, starting with a pre-training process and ending with an AI agent with in-depth fine-tuning.

How is RLHF used in ChatGPT?

Now that we know how RLHF works, let's take a concrete example: let's discuss how RLHF is embedded in the training process of ChatGPT - the world's most popular AI chatbot.

ChatGPT uses the RLHF framework to train the language model to provide context-appropriate, human-like responses. The three main steps included in its training process are:

1. Fine-tuning the language model

The process begins by using supervised learning data to refine the initial language model. This requires building a conversation dataset where AI trainers play the roles of both AI assistant and user. In other words, the AI trainers use suggestions written by the model to write their responses. In this way, the dataset is built with a mixture of model-written and human-generated text, meaning a collection of diverse model responses.

2. Creation of a reward model

After refining the initial model, the reward model comes into play. In this stage, the reward model is created to reflect human expectations. To achieve this, human annotators rank the responses generated by the model according to human preference, quality and other factors.

The rankings are used to train a second machine learning model, called the reward model. This model is able to predict how well a given response aligns with human preferences.
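One simple way to turn annotator rankings into training data for a reward model is to expand each ranking into (preferred, rejected) pairs. The sketch below shows that expansion. The function name `ranking_to_pairs` and the example responses are hypothetical, and this is an illustration of the general idea rather than ChatGPT's actual pipeline.

```python
from itertools import combinations

def ranking_to_pairs(ranked_responses):
    """Expand a ranking (best first) into (preferred, rejected) pairs.

    combinations() keeps the input order, so the first element of each
    pair is always ranked higher than the second.
    """
    return list(combinations(ranked_responses, 2))

# Hypothetical ranking produced by an annotator for one prompt, best to worst.
ranking = ["response A", "response B", "response C"]
for preferred, rejected in ranking_to_pairs(ranking):
    print(preferred, "is preferred over", rejected)
```

A ranking of n responses yields n * (n - 1) / 2 comparisons, so a single annotation pass over a handful of responses already produces several training examples.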

3. Model improvement with Reinforcement Learning

Once the reward model is ready, the final step is to improve the language model's outputs through reinforcement learning. Here, Proximal Policy Optimization (PPO) helps the LLM generate responses that score higher on the reward model.

The three steps above are iterative, meaning that they occur in a loop to improve the performance and accuracy of the overall model.

What are the challenges of using RLHF in ChatGPT?

Although RLHF in ChatGPT has improved response efficiency significantly, it presents some challenges, as follows:

- Dealing with different human preferences. Every human has different preferences. So it's difficult to define a reward model that meets all types of preferences.

- Human labor for "Human Feedback" moderation. Reliance on human labor to optimize responses is costly and slow (and sometimes unethical).

- Consistent use of language. Particular attention is required to ensure consistency of language (English, French, Spanish, Malagasy, etc.) while improving responses.

RLHF benefits

From all the discussions so far, it's clear that RLHF plays a leading role in optimizing AI applications. Some of the key benefits it offers are:

- Adaptability: RLHF offers a dynamic learning strategy that adjusts the model's behavior based on real-time feedback/interactions, which helps it perform well across a wide range of tasks.

- Continuous improvement: RLHF-based models are capable of continuous improvement based on user interaction and further feedback. In this way, they will continue to improve their performance over time.

- Reducing model bias: RLHF can help reduce model bias by involving human feedback, thus limiting concerns about biased outputs or over-generalization.

- Enhanced safety: RLHF makes AI applications safer, because the human feedback loop helps prevent AI systems from producing inappropriate behavior.

In short, RLHF is the key to optimizing AI systems and making them work in a way that aligns with human values and intentions.

RLHF Challenges and Limits

The RLHF craze also comes with a set of challenges and limitations. Some of the main challenges/limitations of RLHF are:

- Human bias: The RLHF model may be affected by human bias, as human raters can unintentionally introduce their own biases into their feedback.

- Scalability: Since RLHF relies heavily on human feedback, it is difficult to scale up the model for larger tasks due to the extensive demands on time and resources.

- Human factor dependency: The accuracy of the RLHF model is influenced by the human factor. Inefficient human responses, particularly in advanced queries, can compromise model performance.

- Question formulation: The quality of the model's answer depends primarily on the formulation of the question. If the question is unclear, the model may still not be able to answer appropriately, even after extensive RLHF training.

Conclusion - What does the future hold for RLHF?

Without a doubt, Reinforcement Learning from Human Feedback (RLHF) promises a bright future and is set to continue playing a major role in the AI industry. With the main objective of having AI that mimics human behaviors and mechanisms, RLHF will continue to improve this aspect through new/advanced techniques. In addition, there will be an increasing focus on resolving its limitations and ensuring a balance between AI capabilities and ethical concerns. At Innovatiana, we hope to contribute to development processes involving RLHF: our moderators are specialized, familiar with the tools and able to accompany you in your most complex AI evaluation tasks.
